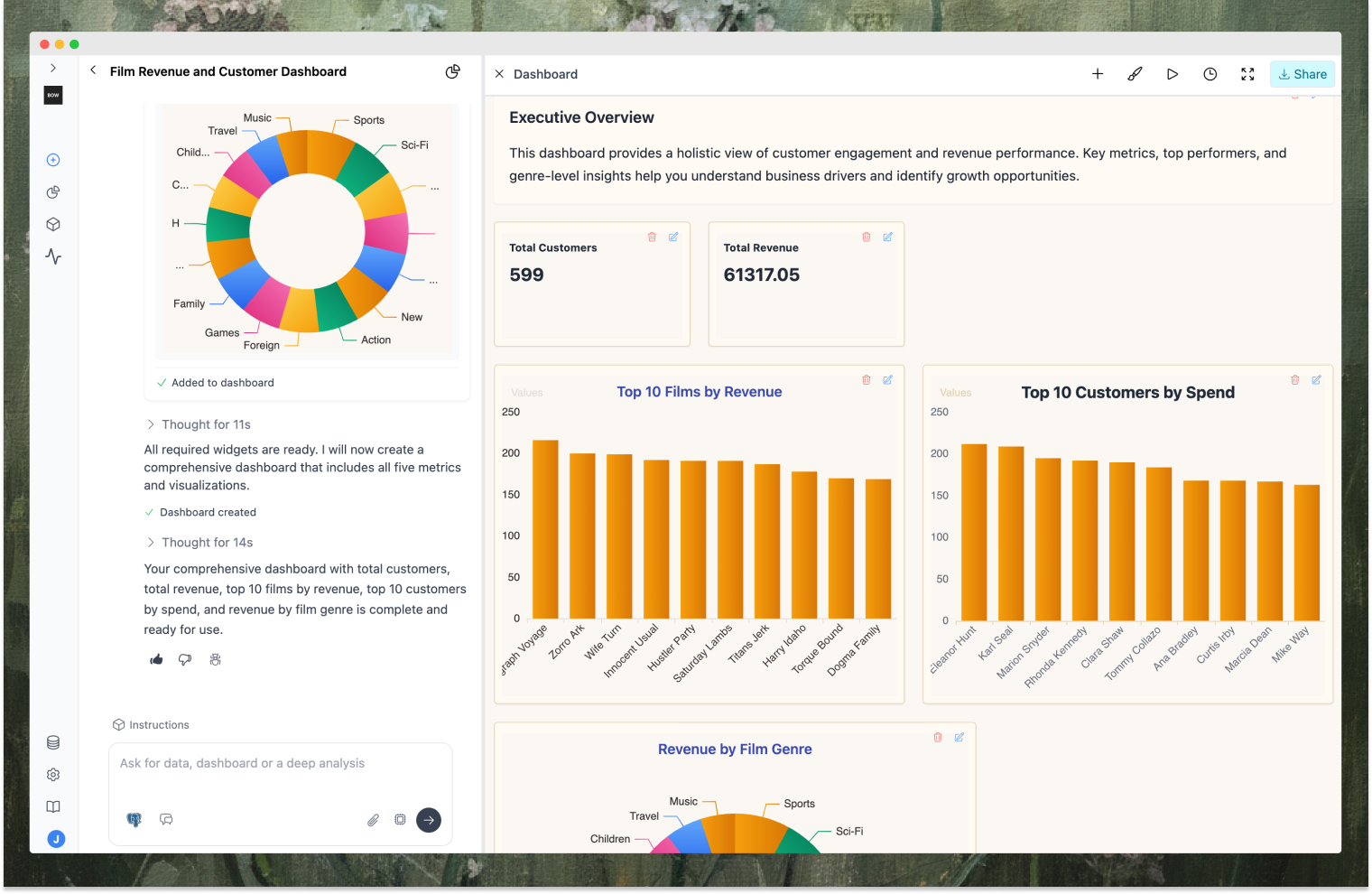

Set up an open-source AI analyst for PostgreSQL in 2 minutes

Context-aware, customizable, and self-improving — an open-source AI analyst for PostgreSQL that builds dashboards you can trust

AI is going to be the interface for data - that’s clear. But most teams aren’t running AI analysts in production yet. They’re stuck experimenting because the AI doesn’t understand their business context, answers are inconsistent, and there’s no way to see what’s breaking.

Bag of words is an open-source framework that solves this. Deploy an AI analyst on PostgreSQL with full observability and control. Customize the context by teaching it your business definitions, connect your dbt models, BI, and documentation, and watch it improve over time as it learns from usage patterns and feedback. Your team asks questions in plain language and gets dashboards that actually make sense. Setup takes a few minutes.

Here’s how to do it.

Prerequisites

Before you begin, you’ll need:

- Docker installed on your machine (installation guide)

- A PostgreSQL database (local or remote) with some data

- Your Postgres connection string ready (e.g., postgresql://user:password@host:5432/database)

- An API key from your preferred LLM provider (OpenAI, Anthropic, Azure OpenAI, or Google)
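If you want to sanity-check a connection string before pasting it into the onboarding form, a minimal sketch like the following can split it into its parts. It uses only the Python standard library; the function name `parse_postgres_url` and the sample URL are illustrative assumptions and not part of Bag of words.

```python
from urllib.parse import urlsplit, unquote


def parse_postgres_url(url: str) -> dict:
    """Split a postgresql:// URL into the fields a connection form asks for."""
    parts = urlsplit(url)
    if parts.scheme not in ("postgresql", "postgres"):
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    database = parts.path.lstrip("/")
    if not parts.hostname or not database:
        raise ValueError("connection string needs a host and a database name")
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,  # fall back to the Postgres default port
        "database": unquote(database),
        # Credentials may be percent-encoded (e.g. "@" written as "%40").
        "username": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
    }


# Hypothetical sample value; replace it with your own connection string.
fields = parse_postgres_url("postgresql://user:p%40ss@db.example.com:5432/analytics")
print(fields["host"], fields["port"], fields["database"])
```

Special characters in the password need to be percent-encoded inside the URL, which is why the sketch decodes them before showing the individual fields.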

Step 1 — Deploy Bag of words

Let’s start by deploying Bag of words locally using Docker.

Run the following command:

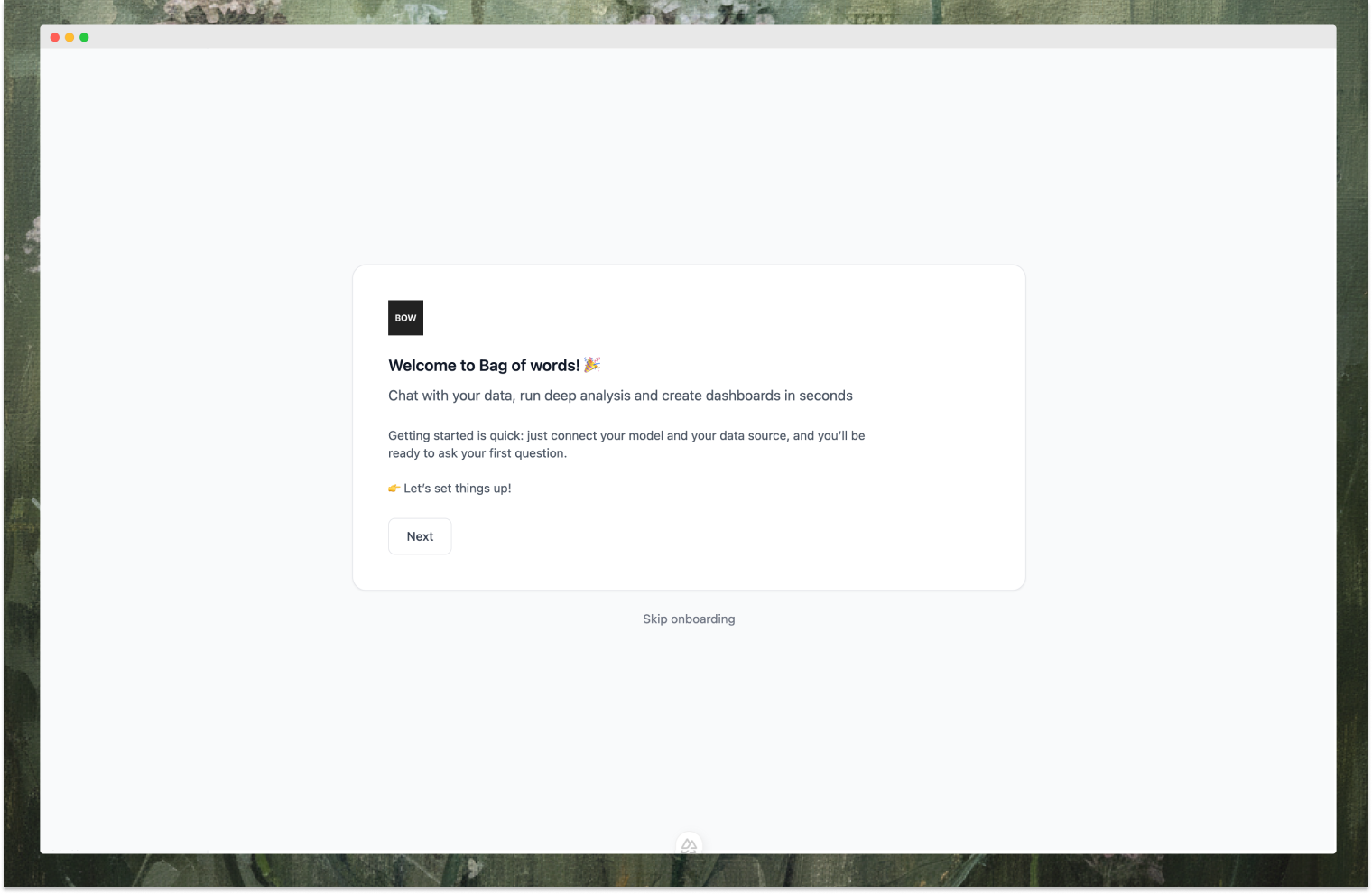

docker run --pull always -d -p 3000:3000 bagofwords/bagofwords

After a few seconds, the service will be running. Open your browser and navigate to:

http://0.0.0.0:3000

You’ll see the Bag of words onboarding flow. Let’s walk through it.
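If you script the deployment, you may want to wait until the container answers before opening the browser. The sketch below polls a URL with the standard library until it gets any HTTP response. The helper names, the retry counts, and the use of `http://localhost:3000` (the port published by the command above) are assumptions for illustration; Bag of words may expose a dedicated health endpoint that this sketch does not rely on.

```python
import time
import urllib.error
import urllib.request


def http_probe(url: str) -> bool:
    """Return True if the URL answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=2):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, even if with an error status
    except OSError:
        return False  # connection refused or timed out: not ready yet


def wait_until_ready(url, probe=http_probe, attempts=30, delay=1.0) -> bool:
    """Call probe(url) up to `attempts` times, sleeping `delay` seconds between tries."""
    for _ in range(attempts):
        if probe(url):
            return True
        time.sleep(delay)
    return False


# Example call (not executed here):
# wait_until_ready("http://localhost:3000")
```

The probe is passed in as a parameter so the retry loop can be exercised without a running container.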

Step 2 — Configure Your LLM

The first step in onboarding is connecting to an LLM provider.

- Choose your LLM provider: OpenAI, Anthropic, Azure OpenAI, or Google

- Enter your API key

- (Optional) Select a specific model (e.g., GPT, Claude)

- Click Test Connection to verify

- Click Next

Why this matters:

Bag of words is LLM-agnostic. You bring your own key and choose your provider. This gives you control over cost, performance, and data residency.

Step 3 — Connect Your Data Source and Select Tables

Now let’s connect to your PostgreSQL database.

Connect the Data Source

- Select PostgreSQL from the list of available data sources

- Enter your connection details:

  - Name: Something descriptive like “Production Analytics”

  - Host: Your database host (e.g., localhost or db.example.com)

  - Port: 5432 (default)

  - Database: Your database name

  - Username and Password

  - Or paste your full connection string

- Click Test Connection to verify

- Click Next

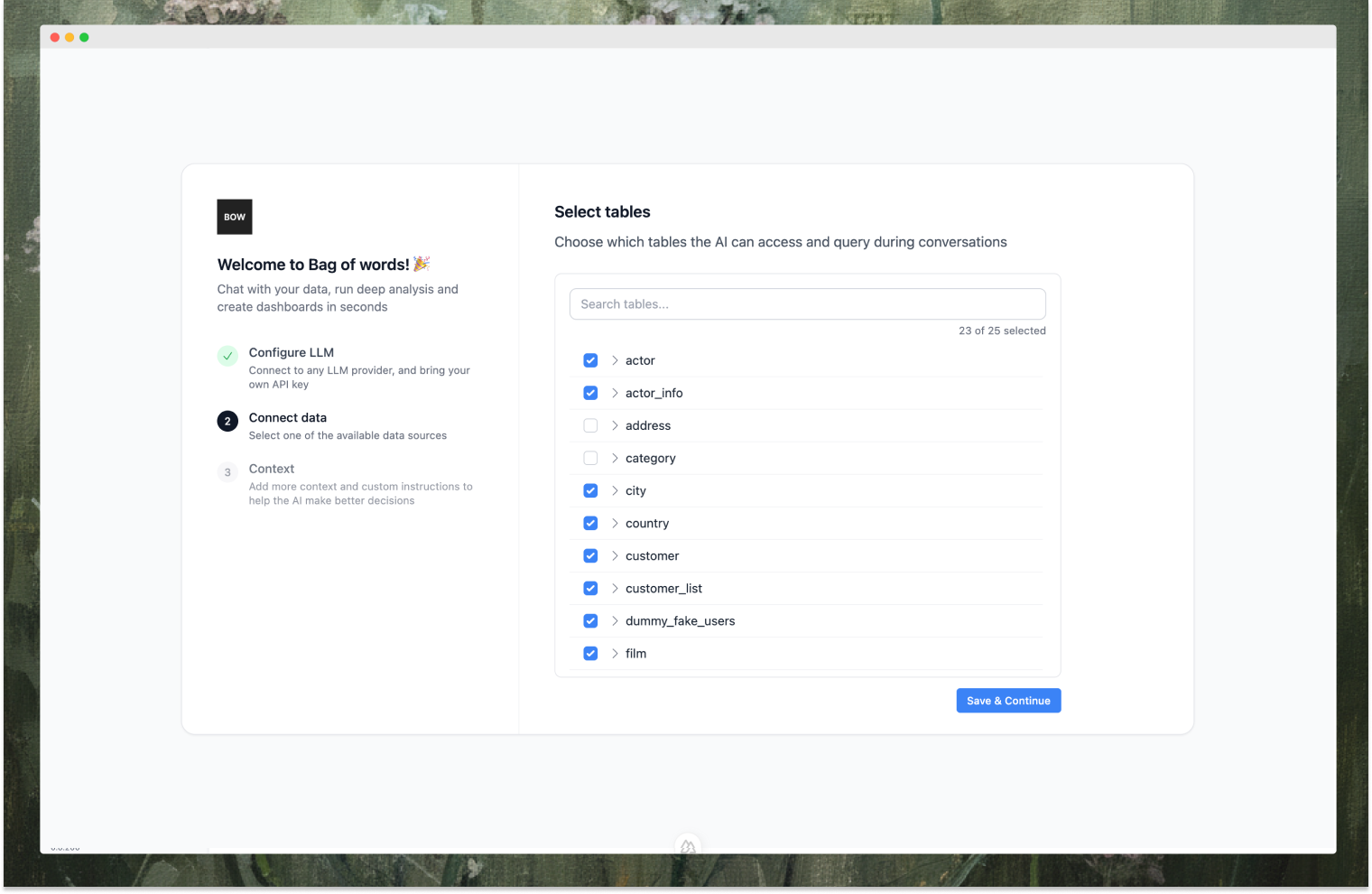

Select Tables

After connecting, Bag of words introspects your database schema. You’ll see a list of all available tables.

Choose which tables the AI can access:

- Select all tables if you want the AI to have full visibility

- Or pick specific tables based on what your team needs

- You can always adjust this later in Settings

Table selection affects the quality of the AI’s answers. Start with a few relevant tables and add more as needed: fewer tables give the AI a more focused context and better query accuracy.

Click Next when ready.

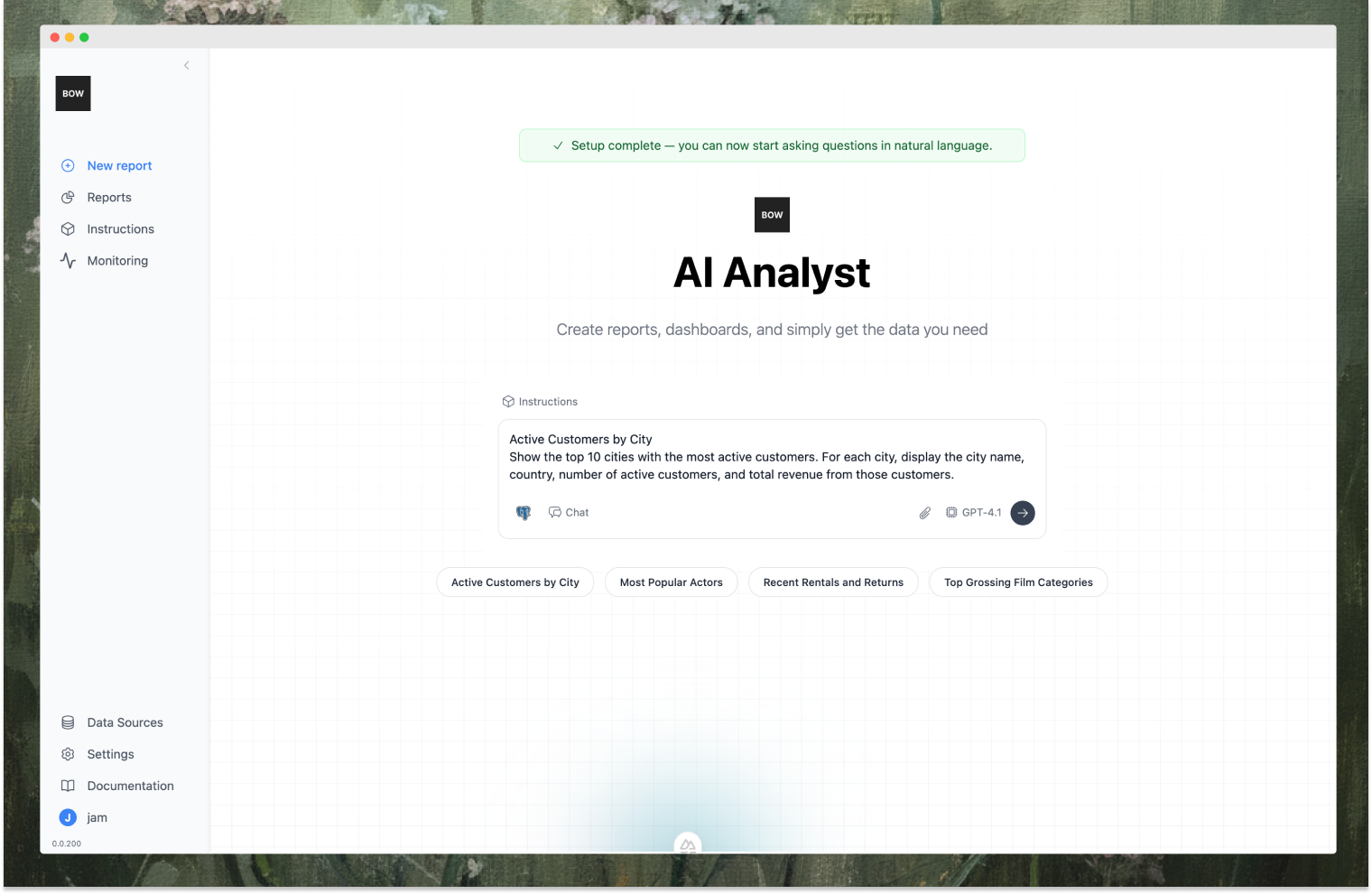

Step 4 — Ask Your First Question

The onboarding is complete! Now you can start asking questions.

The interface will show you some conversation starters to get you going, or you can type your own question in plain English.

Let’s try an example:

Show me a line chart of daily active users for the last 30 days

Hit Enter and watch what happens.

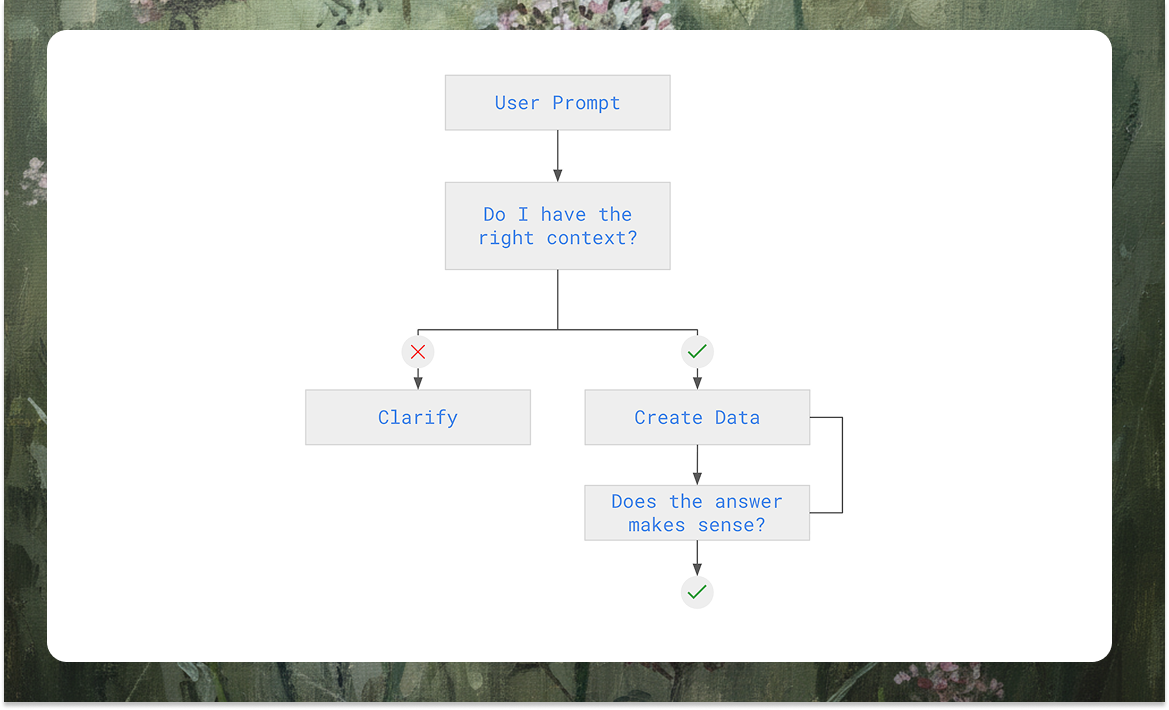

Behind the scenes, the AI agent:

- Reads your question

- Examines the database schema and context

- Stops and asks for clarification if its confidence is low or the context is insufficient; otherwise continues

- Plans a data model and generates code

- Executes it against your Postgres database

- Returns the result as a table and (if appropriate) a chart

You’ll see the data model being constructed in real time, followed by code generation and execution to get the data. Then you’ll get a line chart showing the trend.
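The control flow in the list above can be sketched as a small function. This is a simplified illustration, not Bag of words' actual implementation: `AgentResult`, `answer`, `toy_confidence`, and the `0.7` threshold are all hypothetical, and the planning and execution steps are passed in as plain callables.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class AgentResult:
    clarification: Optional[str] = None  # question sent back to the user
    rows: Optional[list] = None          # query result when the agent proceeds


def answer(
    question: str,
    schema: dict,
    estimate_confidence: Callable[[str, dict], Tuple[float, str]],
    plan_query: Callable[[str, dict], str],
    run_query: Callable[[str], list],
    threshold: float = 0.7,  # assumed cutoff, not a documented setting
) -> AgentResult:
    """Ask for clarification when confidence is low; otherwise plan and execute."""
    confidence, open_question = estimate_confidence(question, schema)
    if confidence < threshold:
        return AgentResult(clarification=open_question)
    query = plan_query(question, schema)
    return AgentResult(rows=run_query(query))


def toy_confidence(question: str, schema: dict) -> Tuple[float, str]:
    # Toy heuristic: "revenue" is ambiguous when several revenue columns exist.
    candidates = [c for c in schema["columns"] if "revenue" in c]
    if "revenue" in question.lower() and len(candidates) > 1:
        return 0.3, f"I found {' and '.join(candidates)}. Which one should I use?"
    return 0.9, ""
```

In a real agent the confidence estimate and the query plan would come from the LLM; keeping them as injected callables only makes the stop-or-continue decision visible.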

When Questions Are Ambiguous

If your question is ambiguous, the AI will ask for clarification before proceeding. For example:

Show me revenue by region

The AI might respond: “I found both gross_revenue and net_revenue columns. Which one should I use?”

You clarify: “Use gross_revenue”

The AI then generates the correct query and returns your chart.

Here’s the best part: After answering your question, the AI will suggest an instruction based on the clarification:

“When user asks for data related to ‘revenue’, by default use gross_revenue”

Click Accept, and this rule is saved as an instruction. Next time anyone asks about revenue, the AI will know what you mean—no clarification needed.

This feedback loop means the system gets smarter over time, learning your business logic with every interaction.
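One way to picture this feedback loop is as a small store of accepted rules that gets prepended to later prompts. The sketch below is purely illustrative: `InstructionStore` and its methods are hypothetical names, and it makes no claim about how Bag of words persists or applies instructions internally.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InstructionStore:
    instructions: List[str] = field(default_factory=list)

    def suggest(self, term: str, choice: str) -> str:
        """Turn a resolved clarification into a candidate rule for the user to review."""
        return f"When the user asks for data related to '{term}', use {choice} by default"

    def accept(self, instruction: str) -> None:
        """Save a rule the user accepted, skipping exact duplicates."""
        if instruction not in self.instructions:
            self.instructions.append(instruction)

    def as_context(self) -> str:
        """Render the saved rules as a bullet list that can be added to a prompt."""
        return "\n".join(f"- {rule}" for rule in self.instructions)


store = InstructionStore()
suggestion = store.suggest("revenue", "gross_revenue")
store.accept(suggestion)  # corresponds to clicking Accept
print(store.as_context())
```

The rule is only saved after an explicit accept step, mirroring the review step described above.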

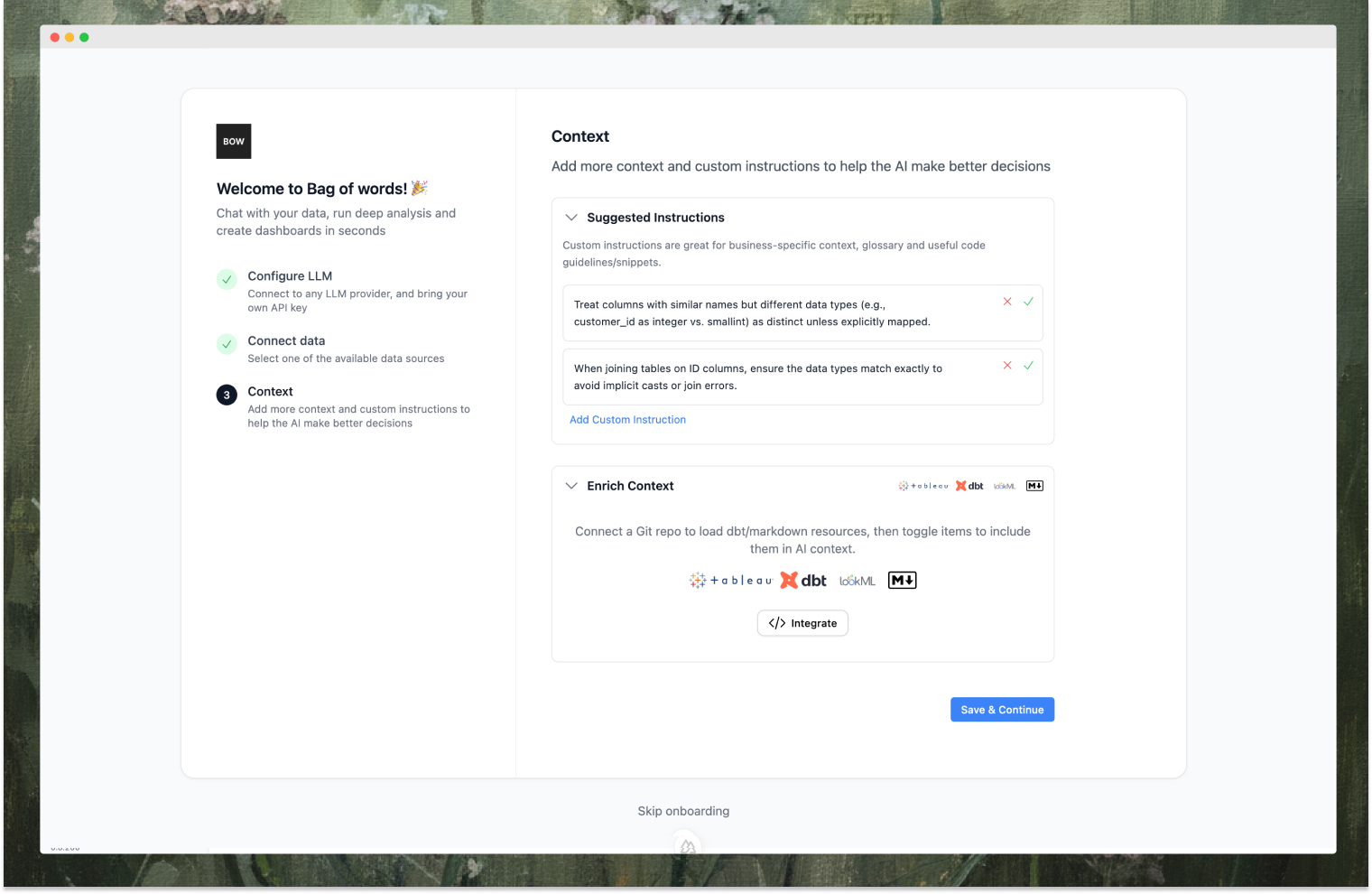

Step 5 — Add Context to Improve Accuracy

This is the key to reliable AI analyst deployments. The solution is only as good as the context you build.

Context comes from two sources:

- Machine-generated — Usage patterns, clarifications, and learnings from production queries

- Human-provided — Instructions, dbt docs, and semantic layer enrichments

Add instructions: Click Instructions above the prompt box. Write business rules in plain language like “Revenue means gross_revenue” or “Active users have last_seen_at within 30 days”. These apply to every query.

Connect your semantic layer: Go to Integrations → Context and connect your dbt project, LookML files, or markdown documentation. The AI will automatically index your models, descriptions, and relationships—then reference them by name when generating queries.

Together, these create a knowledge base that makes the AI increasingly accurate and aligned with your business logic.

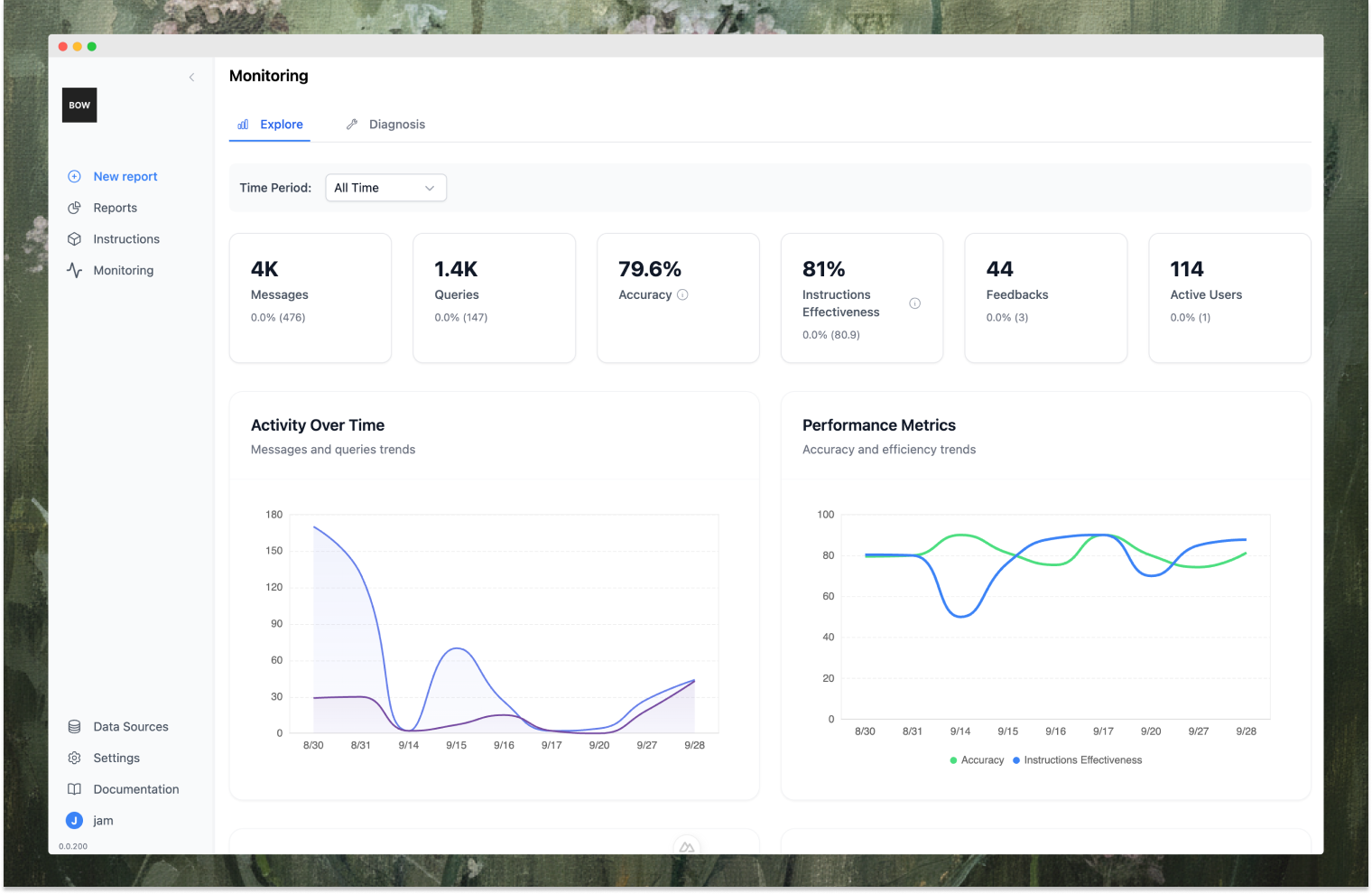

Step 6 — Monitor and Track AI Analyst Quality

Everything is tracked. Go to Monitoring to see a complete audit trail of all AI interactions—every query, every clarification, every piece of feedback.

Key Metrics to Track

While the product exposes many detailed metrics, here are the high-level indicators you should monitor:

- Context coverage — Frequency with which the context (instructions, enrichments) equips the agent with adequate confidence for your prompt

- Accuracy (judge) — Automated quality scores from AI judges evaluating correctness

- Negative feedback — User thumbs down signals that need investigation

- Clarification rate — How often the AI needs to ask for clarification

These metrics tell you whether your AI analyst is production-ready and where to focus your context-building efforts.
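To make these indicators concrete, here is a rough sketch of how such rates could be computed from a log of interactions. The `Interaction` record and its fields are assumptions for illustration; the product's own Monitoring view is the source of truth, and context coverage is left out because its exact definition depends on how the agent scores its own confidence.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Interaction:
    asked_clarification: bool  # the AI had to ask a follow-up question
    thumbs_down: bool          # the user gave negative feedback
    judge_score: float         # automated quality score, assumed to be in [0, 1]


def summarize(log: List[Interaction]) -> Dict[str, float]:
    """Aggregate per-interaction signals into the high-level rates."""
    n = len(log)
    if n == 0:
        return {"clarification_rate": 0.0, "negative_feedback_rate": 0.0, "mean_judge_score": 0.0}
    return {
        "clarification_rate": sum(i.asked_clarification for i in log) / n,
        "negative_feedback_rate": sum(i.thumbs_down for i in log) / n,
        "mean_judge_score": sum(i.judge_score for i in log) / n,
    }


# Made-up sample data, only to show the shape of the input.
sample_log = [
    Interaction(True, False, 1.0),
    Interaction(False, True, 0.5),
    Interaction(False, True, 0.5),
    Interaction(False, False, 1.0),
]
print(summarize(sample_log))
```

Tracking these rates over time, rather than as single snapshots, shows whether added context is actually reducing clarifications and negative feedback.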

Conclusion

In just a few minutes, you’ve deployed an open-source AI analyst on PostgreSQL, connected your database, asked natural language questions, added business context, and learned how to monitor and evaluate query quality.

Unlike black-box AI SQL tools, Bag of words gives you the tools to actually get to production. Every query is traceable. Every decision is visible. Your metrics tell you when the analyst is ready, and you control the context, the LLM, and the governance rules.

What You’ve Built

You now have:

- A natural language interface to your Postgres database

- Context-aware query generation using your business definitions

- Full observability into how the AI reasons and what SQL it generates

- A foundation for building dashboards, Slack bots, or embedded analytics

Next Steps

From here, you can:

- Invite members, manage permissions, and set governance for data sources and reports

- Integrate with Slack to let your team ask questions directly in channels

- Build dashboards by saving queries and pinning them to a shared view

- Customize the LLM (swap OpenAI for Anthropic, Gemini, or a self-hosted model)

- Deploy to production using Docker Compose or Kubernetes in your own VPC

AI is becoming the interface for data—but only if it’s trustworthy. Now you have the tools to make it so.