Semantic Layers Are Bad for AI

How rigid data models limit AI reasoning, and how rethinking semantic layers can turn control into context

The title’s a bit clickbait, but bear with me. A proper semantic layer is often hailed as the holy grail for data organizations: self-service analytics with centralized quality control, letting non-technical users explore data safely.

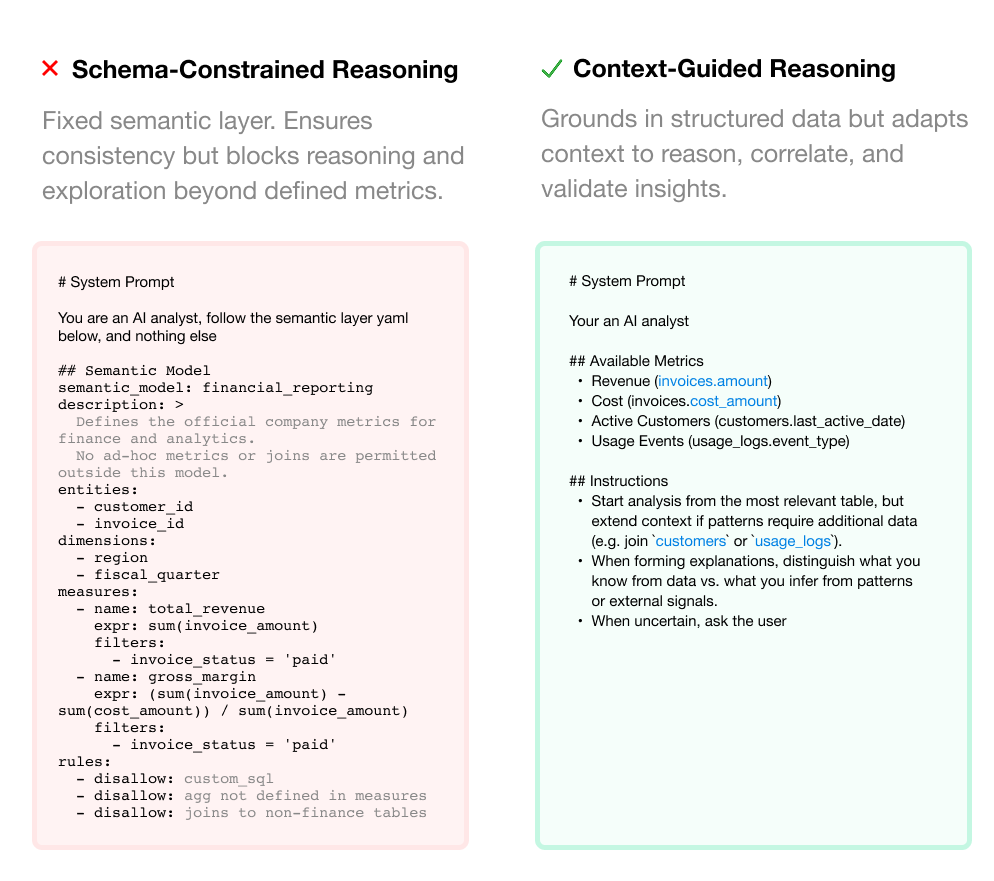

That model works beautifully for BI dashboards. When it is applied to AI, though, the same rigidity that protects metrics ends up suffocating reasoning.

Why it’s bad for AI

BI semantics were designed to report truth, not to discover it. They make data reliable and consistent, which is exactly what business intelligence needs. AI systems reason differently. Their strength lies in generalization, hypothesis generation, and multi-step inference. A capable AI analyst shouldn’t just restate what happened; it should explore why it happened.

Example: The perfectly wrong answer

> User: Why did we have a drop in sales last quarter?

> AI: Sales dropped by 8%.

> User: Right… but why?

> AI: Because total sales were lower than the previous quarter.

The AI isn’t wrong; it’s limited. The semantic layer gave it access only to aggregated metrics, so it can describe outcomes but not reason about causes. It can’t explore whether the drop came from a single product line, a campaign change, or a regional shift, because all of that context sits outside its defined schema. As an end user, you can’t help but ask: what’s the value over a dashboard?
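
To make the contrast concrete, here is a minimal sketch of the drill-down the AI could run if it could see row-level data instead of a single aggregated metric. The records, quarter labels, and the `contribution_by` and `pct_change` helpers are hypothetical. The toy numbers were chosen so the total drop is 8%, matching the dialogue.

```python
from collections import defaultdict

# Hypothetical row-level records: (quarter, product_line, region, revenue).
ROWS = [
    ("Q1", "Widgets", "EMEA", 120.0),
    ("Q1", "Widgets", "NA", 200.0),
    ("Q1", "Gadgets", "EMEA", 80.0),
    ("Q1", "Gadgets", "NA", 100.0),
    ("Q2", "Widgets", "EMEA", 118.0),
    ("Q2", "Widgets", "NA", 198.0),
    ("Q2", "Gadgets", "EMEA", 42.0),
    ("Q2", "Gadgets", "NA", 102.0),
]


def pct_change(rows, prev, curr):
    """Relative change in total revenue between two quarters (e.g. -0.08 for -8%)."""
    prev_total = sum(r[3] for r in rows if r[0] == prev)
    curr_total = sum(r[3] for r in rows if r[0] == curr)
    return (curr_total - prev_total) / prev_total


def contribution_by(rows, dim_index, prev, curr):
    """Revenue change per value of one dimension, largest drop first.

    dim_index selects the column to break down by: 1 = product_line, 2 = region.
    """
    deltas = defaultdict(float)
    for row in rows:
        quarter, key, revenue = row[0], row[dim_index], row[3]
        if quarter == curr:
            deltas[key] += revenue
        elif quarter == prev:
            deltas[key] -= revenue
    # Sort ascending so the biggest negative contributor comes first.
    return dict(sorted(deltas.items(), key=lambda kv: kv[1]))


if __name__ == "__main__":
    print(f"Total change: {pct_change(ROWS, 'Q1', 'Q2'):.1%}")
    print("By product line:", contribution_by(ROWS, 1, "Q1", "Q2"))
    print("By region:", contribution_by(ROWS, 2, "Q1", "Q2"))
```

On these toy numbers, the breakdown attributes the entire regional drop to EMEA and most of the product drop to Gadgets. That is the kind of follow-up answer the aggregated metric alone cannot support.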

You can keep extending the layer with more joins and filters, but each patch adds rigidity and complexity. Over time the schema grows and the rules pile up, while the model’s ability to reason within it shrinks. Too much structure prunes reasoning; too little leads to drift. The goal is lighter scaffolding: just enough to ground the model without dictating how it thinks.

How to balance?

You don’t need to discard your existing BI or semantic investments. You need to change their purpose. In AI systems, the semantic layer should guide, not enforce. It provides structure and definitions to ground the model’s reasoning, but it should no longer dictate how that reasoning unfolds.

Since every token in context matters, the layer itself must be rethought. Remove rules, filters, or joins that are too rigid or unnecessary. Keep only what adds clarity or meaning. The goal is a leaner, more expressive structure that helps the model reason without being boxed in.
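
The sketch below shows one way to do this pruning. It assumes a metric definition exported from a semantic layer as a plain dictionary. The field names (`expression`, `grain`, `allowed_group_bys`, and so on) and the choice of which fields to keep are hypothetical, since real semantic layers use their own schemas. The idea is to keep the definition and drop the enforcement and plumbing. One example is the `allowed_group_bys` list, which would stop the model from drilling into product lines or regions.

```python
# Hypothetical metric definition exported from a semantic layer.
NET_SALES = {
    "name": "net_sales",
    "description": "Order revenue minus refunds and discounts, counting completed orders only.",
    "expression": "SUM(order_amount) - SUM(refund_amount) - SUM(discount_amount)",
    "grain": "order",
    "dimensions": ["product_line", "region", "campaign", "order_date"],
    "allowed_group_bys": ["order_date"],  # rigid constraint: blocks drill-down
    "joins": ["orders LEFT JOIN refunds USING (order_id)"],  # plumbing
    "cache_ttl_seconds": 3600,  # plumbing
}

# Fields assumed to carry meaning for the model; everything else is dropped.
KEEP = ("name", "description", "expression", "grain", "dimensions")


def to_lean_context(metric: dict, keep=KEEP) -> str:
    """Render only the meaningful fields of a metric as compact prompt text."""
    lines = []
    for key in keep:
        if key not in metric:
            continue
        value = metric[key]
        if isinstance(value, list):
            value = ", ".join(value)
        lines.append(f"{key}: {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_lean_context(NET_SALES))
```

Using a whitelist rather than a blacklist is a deliberate choice. New enforcement fields added to the layer later stay out of the prompt by default, so the context only grows when someone decides a field adds meaning.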

Practically speaking, use retrieval to pull the relevant models or metrics from your BI system, restructure them within the prompt, and fuse them with natural language instructions. This keeps the model grounded in truth while preserving the flexibility it needs to think.
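
Here is a minimal sketch of that retrieve, restructure, and fuse loop. The catalog entries, instruction text, and helper names are hypothetical. Simple keyword overlap stands in for the embedding search a production system would more likely use. In practice the catalog would be loaded from your BI tool rather than hardcoded.

```python
import re

# Hypothetical catalog of metric definitions pulled from a BI tool.
CATALOG = [
    {"name": "net_sales", "description": "Order revenue minus refunds and discounts.",
     "dimensions": ["product_line", "region", "campaign"]},
    {"name": "active_customers", "description": "Customers with at least one order in the period.",
     "dimensions": ["region", "segment"]},
    {"name": "support_tickets", "description": "Tickets opened with customer support.",
     "dimensions": ["channel", "priority"]},
]

# Natural-language guidance fused with the retrieved definitions.
INSTRUCTIONS = (
    "Use the definitions below as the source of truth for what each metric means. "
    "They describe the data; they do not limit how you analyze it. "
    "When explaining a change, break it down by the listed dimensions and say which ones drive it."
)

STOPWORDS = {"a", "an", "and", "at", "did", "for", "have", "in", "of", "one",
             "the", "to", "we", "why", "with"}


def tokens(text):
    """Lowercase word set with common stopwords removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def retrieve(question, catalog, k=2):
    """Return up to k metrics sharing at least one keyword with the question."""
    q = tokens(question)

    def score(metric):
        return len(q & tokens(metric["name"].replace("_", " ") + " " + metric["description"]))

    ranked = sorted(catalog, key=score, reverse=True)
    return [m for m in ranked[:k] if score(m) > 0]


def build_prompt(question, catalog):
    """Restructure retrieved metrics into compact lines and fuse them with instructions."""
    parts = [INSTRUCTIONS, "", "Relevant metrics:"]
    for m in retrieve(question, catalog):
        parts.append(f"- {m['name']}: {m['description']} (dimensions: {', '.join(m['dimensions'])})")
    parts += ["", f"Question: {question}"]
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("Why did we have a drop in sales last quarter?", CATALOG))
```

Listing each metric's dimensions in the prompt is what lets the model propose the product, campaign, or regional breakdowns from the earlier example, rather than stopping at the headline number.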

Anthropic describes this balance in its article “Effective Context Engineering for AI Agents”: the “right altitude” for prompts lies between two extremes. Too much hardcoded logic makes systems brittle, while overly broad guidance leaves them lost. The best systems guide reasoning; they don’t constrain it.

Summary

AI doesn’t make the semantic layer irrelevant; it transforms its purpose. It’s no longer about enforcing rules but about guiding reasoning. Keep the shared definitions, drop the constraints. In their next evolution, data systems won’t just report truth; they’ll reason with it.

—

Join the discussion

If you’re building reasoning systems, want to contribute ideas, or want to talk about AI-driven analytics, check out our open-source project https://github.com/bagofwords1/bagofwords.